eks-addons-vpc-cni¶

github¶

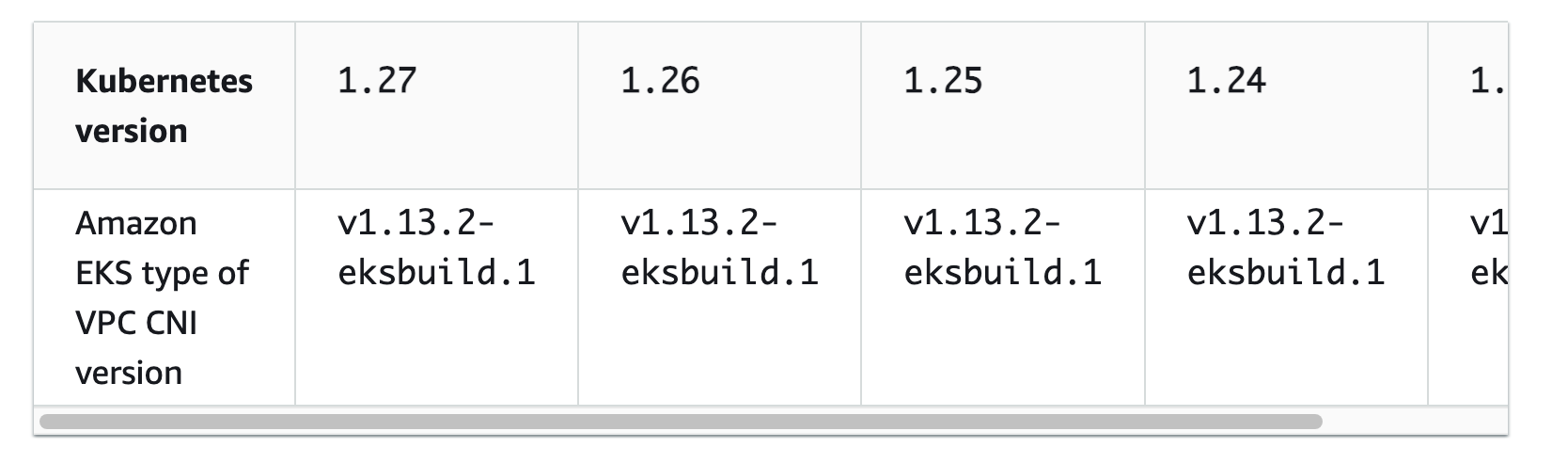

latest version

20230731 update: 1.13.3-eksbuild.1

Upgrading (or downgrading) the VPC CNI version should result in no downtime. Existing pods should not be affected and will not lose network connectivity. New pods will stay in the Pending state until the VPC CNI is fully initialized and can assign pod IP addresses. In v1.12.0+, VPC CNI state is restored from an on-disk file: /var/run/aws-node/ipam.json. In earlier versions, state is restored via calls to the container runtime.

Updating add-on¶

from webui (preferred)¶

This works.

Select PRESERVE as the conflict resolution method.

from cli¶

Did not succeed.

- check addon version
- backup
- upgrade
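The three steps above can be sketched as shell functions. This is a minimal sketch, not the exact commands used in these notes: the function names, the default cluster name `ekscluster1`, the target version, and the backup file name `aws-k8s-cni-old.yaml` are assumptions for illustration. The `aws eks describe-addon` and `aws eks update-addon` subcommands and their flags are standard AWS CLI.

```shell
# Assumed values for illustration; override them in your environment.
CLUSTER_NAME="${CLUSTER_NAME:-ekscluster1}"
TARGET_VERSION="${TARGET_VERSION:-v1.13.3-eksbuild.1}"

# 1. check addon version: prints the currently installed add-on version
check_addon_version() {
  aws eks describe-addon --cluster-name "${CLUSTER_NAME}" \
    --addon-name vpc-cni \
    --query "addon.addonVersion" --output text
}

# 2. backup: save the current aws-node DaemonSet manifest to a local file
#    (file name is hypothetical)
backup_aws_node() {
  kubectl get daemonset aws-node -n kube-system -o yaml > aws-k8s-cni-old.yaml
}

# 3. upgrade: PRESERVE keeps existing settings on the cluster where they
#    conflict with the add-on defaults
upgrade_addon() {
  aws eks update-addon --cluster-name "${CLUSTER_NAME}" \
    --addon-name vpc-cni --addon-version "${TARGET_VERSION}" \
    --resolve-conflicts PRESERVE
}
```

Run them in order: `check_addon_version`, then `backup_aws_node`, then `upgrade_addon`, and run `check_addon_version` again once the update has finished.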

re-install¶

# arn:aws:iam::xxx:role/eksctl-ekscluster1-addon-vpc-cni-Role1
# --configuration-values '{"env":{"ENABLE_IPv4":"true","ENABLE_IPv6":"false"}}'
aws eks create-addon --cluster-name ${CLUSTER_NAME} \
  --addon-name vpc-cni --addon-version v1.13.2-eksbuild.1 \
  --service-account-role-arn arn:aws:iam::xxx:role/eksctl-ekscluster1-addon-vpc-cni-Role1 \
  --resolve-conflicts OVERWRITE

# aws eks delete-addon --cluster-name ekscluster1 --addon-name vpc-cni

pod num on node¶

Number of ENIs per instance type

Number of IP addresses per ENI

https://github.com/aws/amazon-vpc-cni-k8s/blob/master/pkg/vpc/vpc_ip_resource_limit.go

(the number of ENIs for the instance type × (the number of IPs per ENI - 1)) + 2
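As a worked example of the formula above, here is a small shell helper. The function name `max_pods` is hypothetical, and the two arguments must be looked up per instance type (for example in the limits file linked above). It assumes the default secondary-IP mode, without prefix delegation.

```shell
# max_pods ENIS IPS_PER_ENI
# (ENIs x (IPs per ENI - 1)) + 2
max_pods() {
  local enis="$1" ips_per_eni="$2"
  echo $(( enis * (ips_per_eni - 1) + 2 ))
}

max_pods 3 10   # m5.large: 3 ENIs, 10 IPv4 addresses per ENI -> prints 29
max_pods 3 6    # t3.medium: 3 ENIs, 6 IPv4 addresses per ENI -> prints 17
```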